Relevance: GS Paper II (Governance) and GS Paper III (Science & Technology)

Source: Press Information Bureau, The Hindu

Artificial Intelligence (AI) is becoming one of the most powerful technologies of our time, shaping everything from education and healthcare to governance, finance and defence. But as AI spreads, so do its risks, including bias, misinformation, deepfakes, privacy violations and job disruption.

To address these risks, the Ministry of Electronics and Information Technology (MeitY), under the IndiaAI Mission, released the AI Governance Guidelines (2025). The guidelines aim to ensure that AI is developed and used responsibly, safely and for the public good, while still encouraging innovation and investment.

What is AI Governance?

AI Governance means creating a framework of rules, standards, institutions, and ethical principles to guide how Artificial Intelligence is built, deployed, and monitored.

It focuses on ensuring that AI systems:

- are safe, accountable and transparent;

- respect human rights and privacy; and

- encourage innovation without enabling misuse.

Simply put, AI governance helps governments and industries use AI for progress—without harming people or society.

Why is AI Governance Needed?

AI is a double-edged sword. While it can boost productivity and improve decision-making, unregulated AI can cause bias, discrimination, security breaches or the spread of misinformation.

Some major reasons for AI governance include:

- Algorithmic bias – AI may reproduce social or gender biases present in poor-quality or unrepresentative training data.

- Privacy and surveillance – Data misuse can violate personal rights.

- Transparency – “Black box” algorithms make it hard to understand how AI makes decisions.

- Safety and reliability – Poorly tested or unsupervised AI in critical areas such as health and transport can cause serious harm.

- Global competitiveness – Responsible, trustworthy AI helps India stay globally credible and competitive while keeping growth inclusive.

AI governance aligns with SDG 9 (Industry, Innovation and Infrastructure), SDG 10 (Reduced Inequalities) and SDG 16 (Peace, Justice and Strong Institutions).

Key Aspects of the Guidelines

Six Pillars of India’s AI Governance Framework

| Pillar | Purpose |
|---|---|
| Infrastructure | Build strong digital and computing infrastructure for AI research and deployment. |
| Capacity Building | Train professionals and create AI literacy among citizens. |
| Policy & Regulation | Create flexible, adaptive policies to promote innovation while managing risks. |
| Risk Mitigation | Detect, assess, and manage ethical, security, or social risks in AI. |
| Accountability | Define clear responsibilities for AI creators, users, and regulators. |
| Institutions | Establish dedicated bodies for AI governance, safety, and compliance. |

Seven Guiding Principles

- Trust is the foundation – Citizens should be able to trust AI systems.

- People-first approach – AI must serve human interests and values.

- Innovation with responsibility – Support innovation but prevent harm.

- Fairness and equity – Avoid bias and promote inclusion.

- Accountability – All actors in the AI chain must be answerable.

- Explainability – AI systems should be transparent and understandable.

- Safety and resilience – Systems should be reliable, secure, and sustainable.

Institutions and Time Frame for Implementation

- Nodal Ministry: MeitY under the IndiaAI Mission.

- Key Bodies:

  - AI Governance Group (AIGG): Oversees policy implementation.

  - AI Safety Institute (AISI): Develops standards, testing, and audits.

  - Technology and Policy Expert Committee (TPEC): Advises on research, ethics, and regulation.


Implementation Timeline:

- Short-term (within 1 year): Set up institutions and adopt a voluntary code of AI ethics.

- Medium-term (2–3 years): Develop risk classification, audit standards, and industry sandboxes.

- Long-term (3–5 years): Move toward a legal framework for AI governance and global alignment.

Challenges and the Way Forward

Challenges:

- Lack of skilled experts for AI safety and audits.

- Over-dependence on voluntary compliance instead of binding regulations.

- Difficulty in tracking AI misuse across multiple sectors.

- Balancing innovation with regulation to avoid slowing India’s AI growth.

Way Forward:

- Build a strong data ecosystem and ensure interoperability of AI systems.

- Promote public awareness and AI literacy to build trust.

- Establish mandatory audits for high-risk AI systems.

- Collaborate globally for standard-setting and ethical frameworks.

- Encourage inclusive AI development so benefits reach all sections of society.

Key Takeaways

- India’s AI Governance Guidelines focus on responsible, transparent, and inclusive AI.

- Built on six pillars and seven guiding principles, the framework aims to balance innovation with safety.

- Implementation is led by MeitY through the AIGG, TPEC and AISI, following a phased timeline.

- Success depends on capacity building, trust, and international cooperation.

One-line Wrap:

India’s AI Governance framework aims to make Artificial Intelligence not just smart—but safe, fair, and human-centred.

UPSC Mains Question:

“Discuss the significance of India’s AI Governance Guidelines (2025). How can they ensure ethical innovation while addressing emerging risks?”

Share This Story, Choose Your Platform!

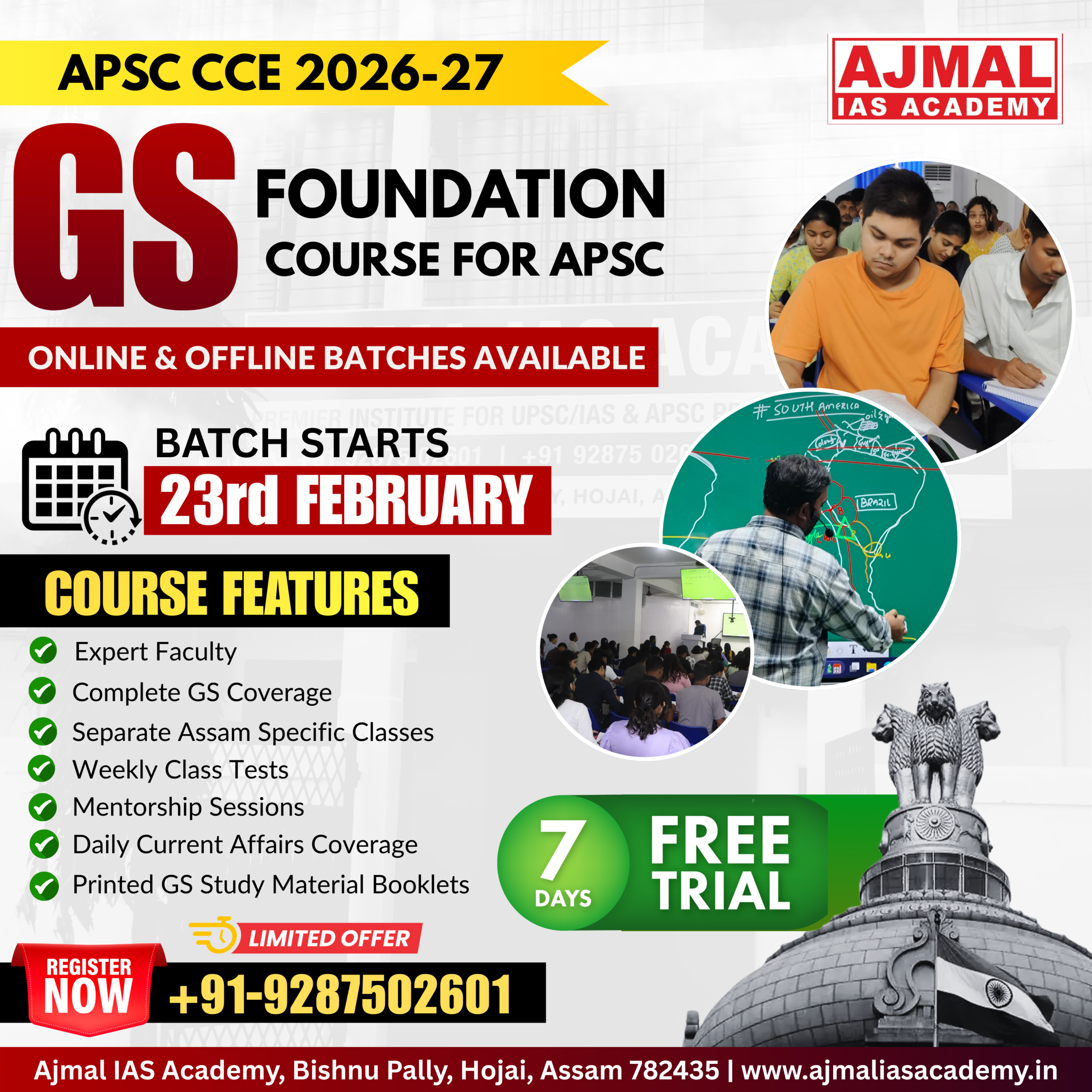
