Relevance: GS-2 (Governance, Child Rights) | GS-3 (Cybersecurity, Technology & Society)

Source: The Hindu; NCPCR statements; The Print; Deccan Herald; Child Protection Frameworks

India’s apex child-rights body, the National Commission for Protection of Child Rights (NCPCR), has begun deploying artificial intelligence (AI)-based tools to detect and prevent the circulation of Child Sexual Abuse Material (CSAM).

This step comes amid a surge in online child sexual exploitation, deepfake-based abuse, grooming, and cross-border syndicates. NCPCR recently reported resolving ~26,000 complaints and rescuing 2,300+ vulnerable children over the last six months.

1. The Widespread Problem of CSAM

- CSAM refers to images/videos depicting sexual abuse or sexualised representation of minors.

- AI technology has enabled synthetic CSAM, where minors’ faces are morphed into explicit content.

- The dark web, encrypted platforms, VPNs, and emerging AI tools have expanded the scale, anonymity, and speed of circulation.

- Reporting gaps persist: experts note that resource-poor States struggle with forensic capacity and cyber investigation.

Legal Landscape

2. India’s AI-Driven Response

NCPCR’s initiative includes:

- AI-powered scanning tools that detect CSAM patterns, deepfakes, grooming indicators, and repeat offenders.

- Automated flagging and triage systems to help cyber units act faster.

- Integration of 16 digital portals, enabling unified complaint tracking, data analysis, and rapid case coordination.

- Partnerships with cyber labs, State police, and child welfare committees.

This marks a shift from reactive policing to proactive detection.

3. Key Challenges & Way Forward

| Challenges (Structural/Technological/Legal) | Way Forward (Governance & Policy Solutions) |
| --- | --- |
| Rapid rise of AI-generated deepfake CSAM | Amend POCSO & IT Act to include synthetic CSAM; adopt global standards (e.g., EU AI Act safeguards). |
| Overburdened cyber cells, limited forensic capacity | Expand cyber forensic labs, training for police; create specialised CSAM units in all States. |
| Inconsistent cooperation from digital platforms | Mandate faster takedown norms; enforce intermediary accountability under IT Rules. |
| Low awareness among parents, schools, and rural communities | Nationwide digital-safety campaigns; integrate child online safety into school curriculum. |
| Fragmented coordination among NCPCR, State police, CWC, and platforms | Create a National CSAM Coordination Framework with shared databases and SOPs. |
| Trauma and re-victimisation of survivors | Strengthen victim-centric procedures, counselling, safe reporting mechanisms. |

4. Significance for Governance & Child Rights

- Fulfils constitutional mandates under Articles 14, 21, and 39(f), which ensure the dignity and protection of children.

- Strengthens India’s compliance with UNCRC obligations.

- Enhances cybersecurity governance, integrating AI into law enforcement.

- Builds a national safety infrastructure aligned with global CSAM-tackling frameworks (e.g., NCMEC, Project Arachnid).

AI can strengthen India’s fight against online child exploitation, but its success depends on robust laws, coordination, and ethical use.

UPSC Mains Question

How can artificial intelligence strengthen India’s child-protection and cybersecurity frameworks? Discuss the legal, ethical, and institutional challenges in tackling online CSAM.

Share This Story, Choose Your Platform!

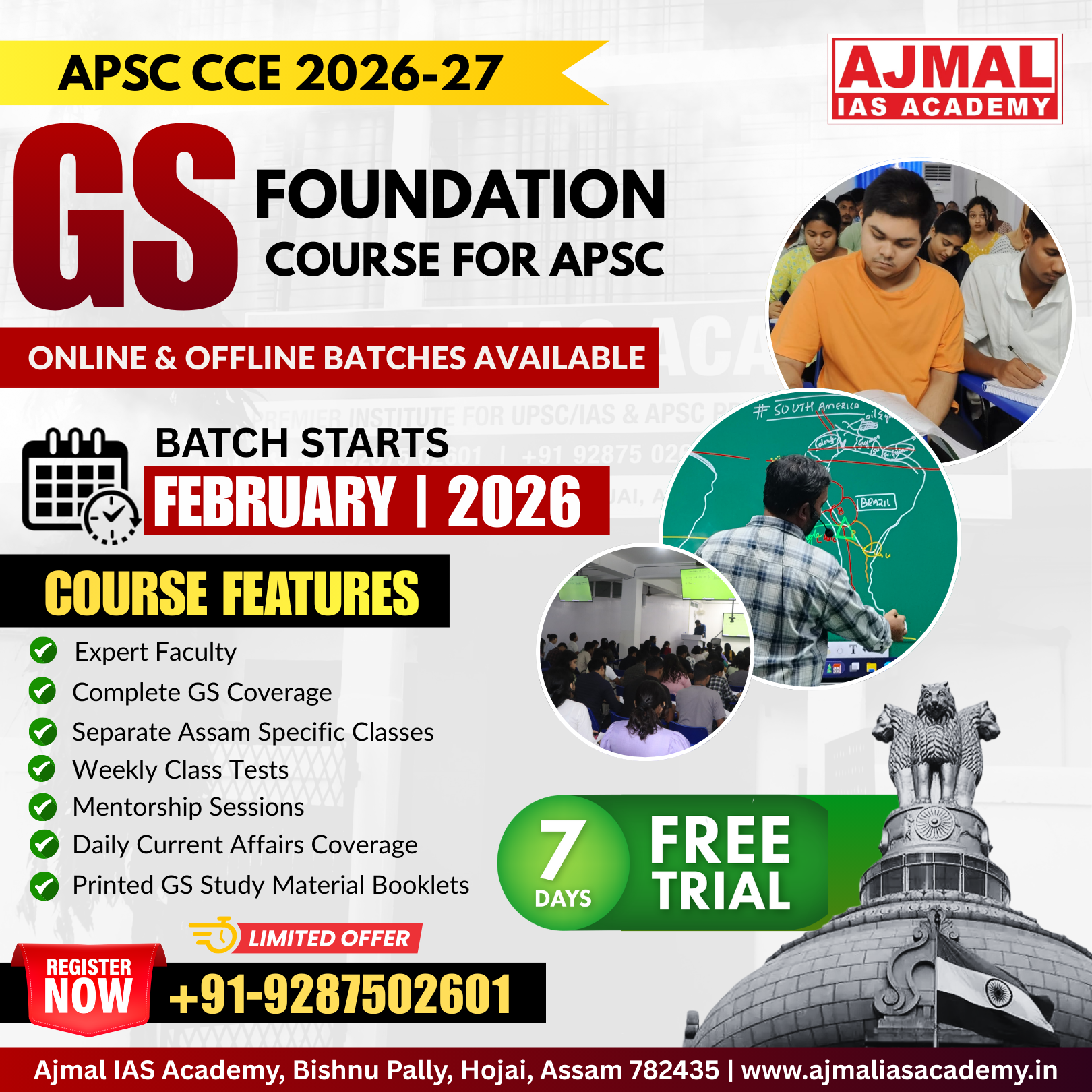

Start Yours at Ajmal IAS – with Mentorship StrategyDisciplineClarityResults that Drives Success

Your dream deserves this moment — begin it here.