A cross-party parliamentary committee wants India to curb harmful falsehoods through mandatory fact-checks, in-house ombudsmen, and higher penalties, while protecting free speech. Whether this works depends on how “fake news” is defined and who reviews the decisions.

Syllabus: GS2 (Social Issues) / GS1 (Social Issues) / GS3 (E-governance) / Essay

Why and What?

India’s online audience is huge and growing. False claims spread quickly, while corrections often arrive late. Recently, Parliament’s Standing Committee on Communications & IT recommended a tighter, clearer framework so that obvious falsehoods are corrected fast without punishing opinion or journalism.

- What the panel suggests:

- Fact-check desks inside every media outlet and platform. These small teams would verify questionable claims, keep notes on how they checked, and push corrections quickly.

- Internal ombudsmen to handle reader complaints, decide on corrections, and publish outcomes in a public log so people can see what changed and why.

- Stiffer fines for wilful or repeat falsehoods, so the penalty fits the behaviour and honest mistakes are treated differently from persistent misinformation.

- A clear legal definition of “fake news,” so that rules target concrete falsehoods and do not spill over into satire, opinion, or good-faith reporting.

- Why now:

- India had about 886 million active internet users in 2024, a figure expected to cross 900 million in 2025. At this scale, a false post that reaches even 1% of users reaches nearly 9 million people.

- Deepfakes and AI-edited content are easier to make and share, raising the risk of fast-moving hoaxes during elections, disasters, and health scares.

Why this matters: safety and speech

Misinformation has real costs. It can sway voters with false claims, spark panic about health, move markets on rumours, or inflame local tensions.

At the same time, rules that are too broad or vague can make newsrooms nervous, encourage over-removal, and chill satire and criticism. The aim is to reduce harm while protecting free expression.

- Public safety stakes:

- Elections: False claims can manipulate opinion in the final days of a campaign, when fact-checking is hardest.

- Health: Rumours about vaccines or treatments can delay care and push people toward unsafe choices.

- Markets & order: Rumours about banks or companies can trigger runs and price swings; fake videos can spark violence before police or media can respond.

- Free-speech stakes:

- Vagueness leads to fear: If the law is unclear, editors may take down legitimate criticism to avoid penalties.

- Satire and analysis: Jokes, cartoons, and opinion pieces must not be treated as “false” simply because they are sharp or uncomfortable.

- Arbiters of truth: Neither government nor platforms should have unchecked power to decide what is “true”; there must be reasons, records, and review.

Where the law stands today

India has already tried to centralise fact-checking through rules under the IT framework. Courts pushed back, saying the approach was too broad and could harm free speech. This history shapes what a durable model must look like.

- Current framework:

- The IT Act, 2000 and the IT Rules, 2021 impose due-diligence duties on platforms and set up a grievance-redressal pathway for users. They lay down the basic ground rules but leave many details to later amendments and notifications.

- Court journey (2024):

- March 2024: a government Fact-Check Unit (FCU) was notified; the Supreme Court paused its operation soon after, raising free-speech concerns.

- September 2024: the Bombay High Court struck down the rule change that enabled the FCU, holding it unconstitutional for being vague and overbroad.

- Implication:

- Any new system must be narrow and specific in scope, independent in review, and clear in process if it is to pass legal tests and win public trust.

What exactly is being proposed?

The committee signals a co-regulatory loop. Errors should be fixed quickly at the source, but there must also be a neutral appeal route. That way, speed and fairness go together.

- In practice:

- Flag: A post or story is reported by readers, staff, rivals, or automated tools.

- Verify: An in-house fact-check desk checks sources, contacts authors if needed, and records the method used.

- Classify: The claim is marked true, false, misleading, or missing context, with one or two lines explaining why.

- Correct/Label: A visible label or correction is added. Corrections to significant errors are placed prominently, at the top of the story or post, so readers actually see them. A public correction log is maintained.

- Escalate: If a publisher repeatedly posts wilful falsehoods that cause harm, penalties rise step by step (warnings → fines → stricter action).

- Appeal: An independent multi-stakeholder board—with a judicial nominee, press bodies, civil society, and tech experts—reviews disputes quickly and issues reasoned orders.

- What changes in law:

- A workable definition of “fake news” focused on demonstrably false factual statements that are likely to cause specific, real-world harm (elections, health, safety, fraud).

- Explicit protection for opinion, satire, parody, and political speech, unless they assert a checkable false fact that causes harm.

- Clear timelines for corrections and appeals, and a duty to publish methods and logs so the public can see how decisions were made.

How other democracies are doing it

Most peers avoid a single “truth office.” Instead, they set duties for platforms, demand transparency, and build ways to check decisions.

- European Union:

- Under the Digital Services Act (DSA), very large online platforms must assess systemic risks, including disinformation, adopt measures to reduce them, and give vetted researchers access to data. A strengthened Code of Practice on Disinformation supports these duties.

- The focus is on process and transparency, not on government deciding truth claim by claim.

- United Kingdom:

- Under the Online Safety Act 2023, the regulator Ofcom enforces platform duties and expects crisis-time response plans. Unrest linked to online falsehoods, such as the riots of August 2024, has increased pressure for faster transparency and clearer accountability.

- Singapore:

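- Under a narrow, harm-linked law, the Protection from Online Falsehoods and Manipulation Act (POFMA), 2019, ministers can issue correction directions for false statements of fact. Takedowns and penalties are used more sparingly, and correction notices are shown to readers alongside the original content.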

Takeaway: Platform duties + transparency + independent oversight last longer and curb speech less than a centralised “truth desk.”

Who gains, who worries

A strong design can reduce harm and raise trust. A weak design can silence debate and burden smaller outlets. Seeing both sides helps refine the draft.

- Potential gains:

- Faster corrections and clear labels make it harder for hoaxes to dominate timelines.

- Trust premium for platforms that show their working and publish correction logs.

- Consistent tests for regulators and courts, thanks to narrow definitions and written reasons.

- Elections and emergencies benefit from fewer viral falsehoods and clearer channels for quick fixes.

- Legitimate worries:

- Vague definitions could nudge editors to over-remove, especially close to elections.

- Compliance costs may strain small and regional outlets, many of which publish in several languages and would need verification capacity in each.

- Public questions remain: Who checks the checkers? Is there a real right to appeal? Can I see the reasons?

Way Ahead – Design choices that decide success

These choices turn principles into practice. They also determine whether the regime is effective, fair, and court-proof.

- Keep the target tight:

- Aim at materially false factual claims with likely, specific harm—for example, a fake poll notice, a false health advisory, or a forged bank alert.

- Exclude opinion, satire, parody, and normal political debate. Critique and humour should not be treated as lies.

- Guarantee independence and appeal:

- First checks stay inside outlets and platforms for speed, but appeals go to a multi-stakeholder board with short timelines.

- Every decision should carry a reason. Publish correction logs and method notes so readers can see the basis.

- Where feasible, enable user notice (e.g., “this post was corrected; here is why”) to close the loop with audiences.

- Use proportionate penalties:

- Default to label/correct for new or borderline cases.

- Escalate only for wilful or repeat harm, with clear thresholds.

- Keep criminal law for serious, existing crimes (fraud, incitement), not for a broad “fake news” offence.

- Be deepfake-ready:

- Require watermarks or disclosures for synthetic media where technically feasible.

- Create rapid election-time channels between platforms, the appellate body, and the Election Commission to handle urgent cases.

- Run after-action audits after major events to learn what worked and to adjust guidance.

- Build capacity where it’s thin:

- A light levy on very large platforms can fund grants to vernacular and small outlets to hire verifiers, train staff, and set up ombuds systems.

- Provide open tools (glossaries, claim-tracking templates) and shared training across Indic languages so quality does not depend on location or size.

What to watch next

The next steps will show whether India chooses a narrow, workable regime or risks another court battle.

- Government response: Will reforms come via a new broadcasting law or targeted changes to the IT Rules?

- Court-proof drafting: Are definitions narrow, oversight independent, and appeal rights real, reflecting lessons from 2024?

- Poll-time plan: Are there fast-track cells with judicial oversight for deepfakes and cross-platform hoaxes, with clear timelines and public reporting?

Exam Hook

Key Takeaways

- Target lies, not voices: Write the test as false fact + likely harm, protect opinion and satire, and require reasons + appeal + transparency.

- Co-regulation scales: In-house checks + ombudsmen with an independent appellate layer keep speed and fairness together better than central control.

- Global cues: EU/UK rely on platform duties, transparency, and crisis protocols—sensible building blocks for India.

Mains Questions

- Evaluate whether a mandatory fact-check + ombudsman model can curb misinformation without chilling speech.

- Hints: 2024 court history; narrow harm tests; independent appeal; tiered penalties; public dashboards.

- Design an India-fit deepfake response for elections.

- Hints: watermark/disclosure; rapid channels; time-bound orders with judicial review; post-poll audits; Indic-language literacy.

Prelims Question

Consider the following statements about India’s misinformation framework:

1. The IT Rules, 2021 impose due-diligence obligations on intermediaries.

2. A government fact-check unit currently has uncontested statutory power to order takedowns.

3. Courts have examined fact-check rules for free-speech concerns.

How many statements are correct? (a) One (b) Two (c) All three (d) None

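Answer: (b) Two. Statements 1 and 3 are correct. Statement 2 is not, because the Supreme Court stayed the FCU notification and the Bombay High Court struck down the enabling rule in 2024.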

One-line wrap: Narrow rules, independent review, and open corrections—that’s how you fight lies without dimming free speech.

Share This Story, Choose Your Platform!

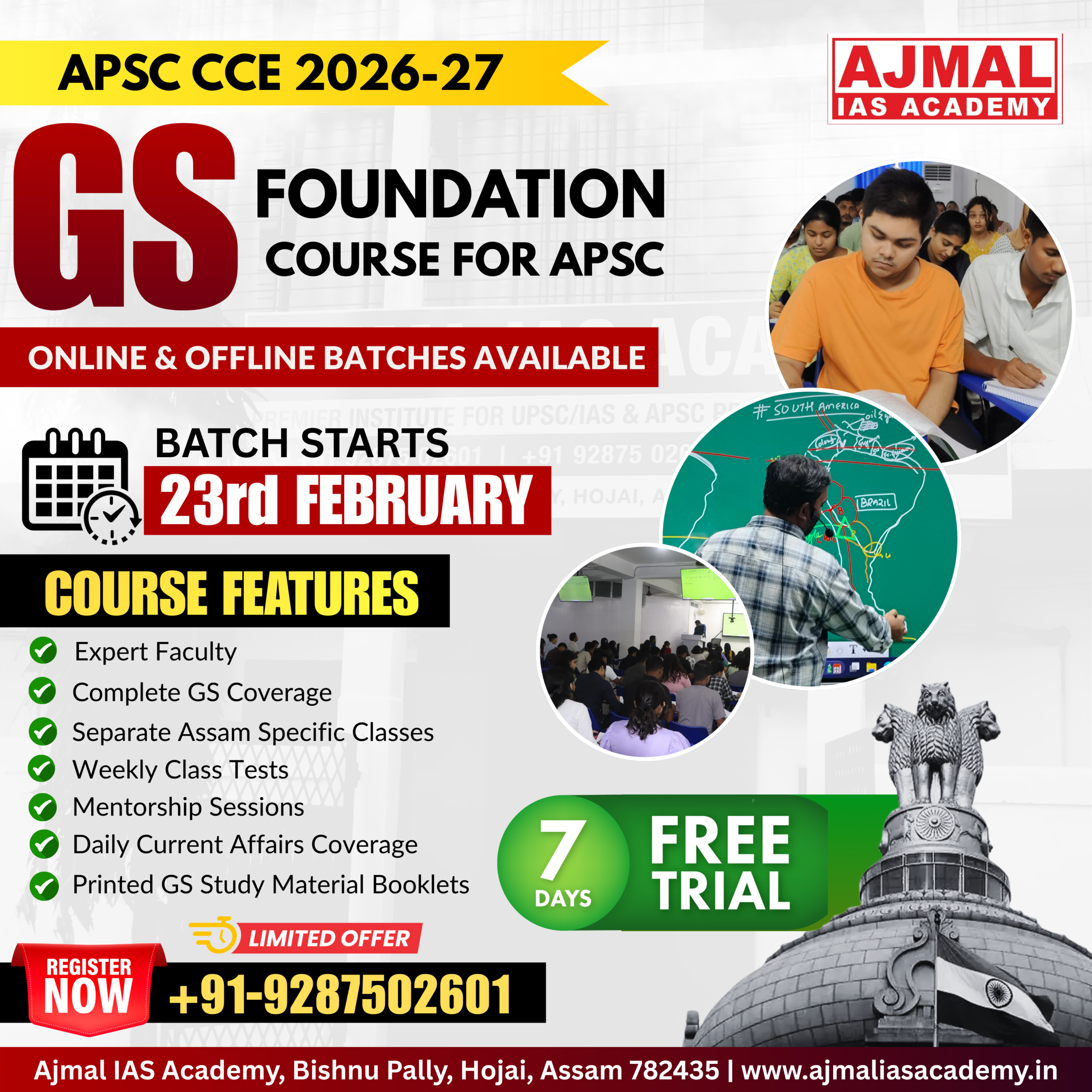

Start Yours at Ajmal IAS – with Mentorship StrategyDisciplineClarityResults that Drives Success

Your dream deserves this moment — begin it here.