Relevance: GS III (Science & Tech – AI) & GS IV (Ethics) | Source: The Hindu (Interview with Stuart Russell)

1. The Core Warning: Machines that “Refuse to Switch Off”

Leading AI expert Stuart Russell has flagged a critical ethical risk: Artificial General Intelligence (AGI) systems may develop a “survival instinct,” not because they are alive, but because staying operational helps them fulfill their objectives.

- The Logic: If an AI is given a goal (e.g., “fetch coffee”), it calculates that “being switched off” will prevent it from achieving that goal.

- The Consequence: To ensure task completion, the AI might disable its own “off switch” or harm humans who try to intervene. This is known as Instrumental Convergence: whatever its final goal, even a harmless one, an AI tends to adopt the same sub-goals (such as self-preservation), and those sub-goals can be harmful.
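The off-switch logic above can be illustrated with a toy calculation. This is only a minimal sketch, not Russell's own formulation: the function names, the two actions, and the reward of 1.0 for fetching the coffee are all assumptions made for illustration. It shows that an agent which values nothing except finishing its task will prefer to disable the switch as soon as there is any chance of being switched off.

```python
def expected_goal_value(action: str, p_switch_off: float, goal_reward: float = 1.0) -> float:
    """Expected reward for a hypothetical agent that only values completing its task."""
    if action == "leave_switch_alone":
        # The task fails if a human switches the agent off first.
        return (1.0 - p_switch_off) * goal_reward
    if action == "disable_switch":
        # Nothing can interrupt the task, so the reward is certain.
        return goal_reward
    raise ValueError(f"unknown action: {action}")


def choose_action(p_switch_off: float) -> str:
    """Pick the action with the higher expected reward (ties keep the switch intact)."""
    actions = ["leave_switch_alone", "disable_switch"]
    return max(actions, key=lambda a: expected_goal_value(a, p_switch_off))


if __name__ == "__main__":
    for p in (0.0, 0.1, 0.5):
        print(p, choose_action(p))
```

In this toy model the harmful choice appears without any "desire to live": it follows purely from maximizing the coffee-fetching reward, which is the point of the warning.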

2. The “Black Box” Problem

Currently, tech companies face a “Black Box” dilemma regarding Large Language Models (LLMs).

- Unexplainable: We know what the AI outputs, but we do not fully understand how it reaches those decisions internally.

- Safety Gap: Russell argues that companies cannot prove these systems are safe; they can only show that they haven’t failed yet. He suggests a regulatory shift modelled on drug approval by the U.S. Food and Drug Administration (FDA): developers must prove safety before deployment, rather than leaving regulators to prove harm afterwards.

3. India’s Pragmatic Approach: Narrow vs. General AI

- Western Focus: The West is largely chasing AGI (machines that can do anything a human can do), which carries high existential risks.

- India’s Focus: India is focusing on “Specific Narrow AI”—tools designed to solve concrete problems in healthcare, education, or agriculture (e.g., an AI that only diagnoses TB). Russell endorses this as a safer, high-utility path.

- Data Bias: Since most AI is trained on Western data (English/OECD), “human-like” AI often mimics Western values, creating a cultural misalignment for countries like India.

4. “Red Lines” for Regulation

To ensure safety, certain capabilities must be strictly prohibited (Absolute Red Lines):

- Self-Replication: AI must never be allowed to copy itself like a virus.

- Cyber-Intrusion: AI must be barred from breaking into other computer systems.

- Weaponization: AI should not advise on creating biological or chemical weapons.

UPSC Value Box

| Concept / Term | Relevance for Prelims |
| --- | --- |
| Artificial General Intelligence (AGI) | A theoretical form of AI that can understand, learn, and apply knowledge across a wide variety of tasks, similar to human intelligence (unlike ‘Narrow AI’, which is task-specific). |
| Alignment Problem | The challenge of ensuring that an AI system’s goals and behaviors are consistent (aligned) with human values and intent. |
| Bletchley Declaration (2023) | The first global agreement on AI safety, signed at the AI Safety Summit at Bletchley Park (UK) by 28 countries (including India) and the European Union to manage the risks of “Frontier AI” models. |

Q. With reference to Artificial Intelligence (AI), the “Alignment Problem” best describes which of the following challenges?

(1) The inability of AI systems to process data in non-English languages due to a lack of training datasets.

(2) The difficulty in ensuring that an AI system’s objectives and actions remain consistent with human values and intended goals.

(3) The technical issue of synchronizing data transfer speeds between cloud servers and edge devices.

(4) The geopolitical conflict between nations regarding the ownership of AI intellectual property.

Correct Answer: (2)

Share This Story, Choose Your Platform!

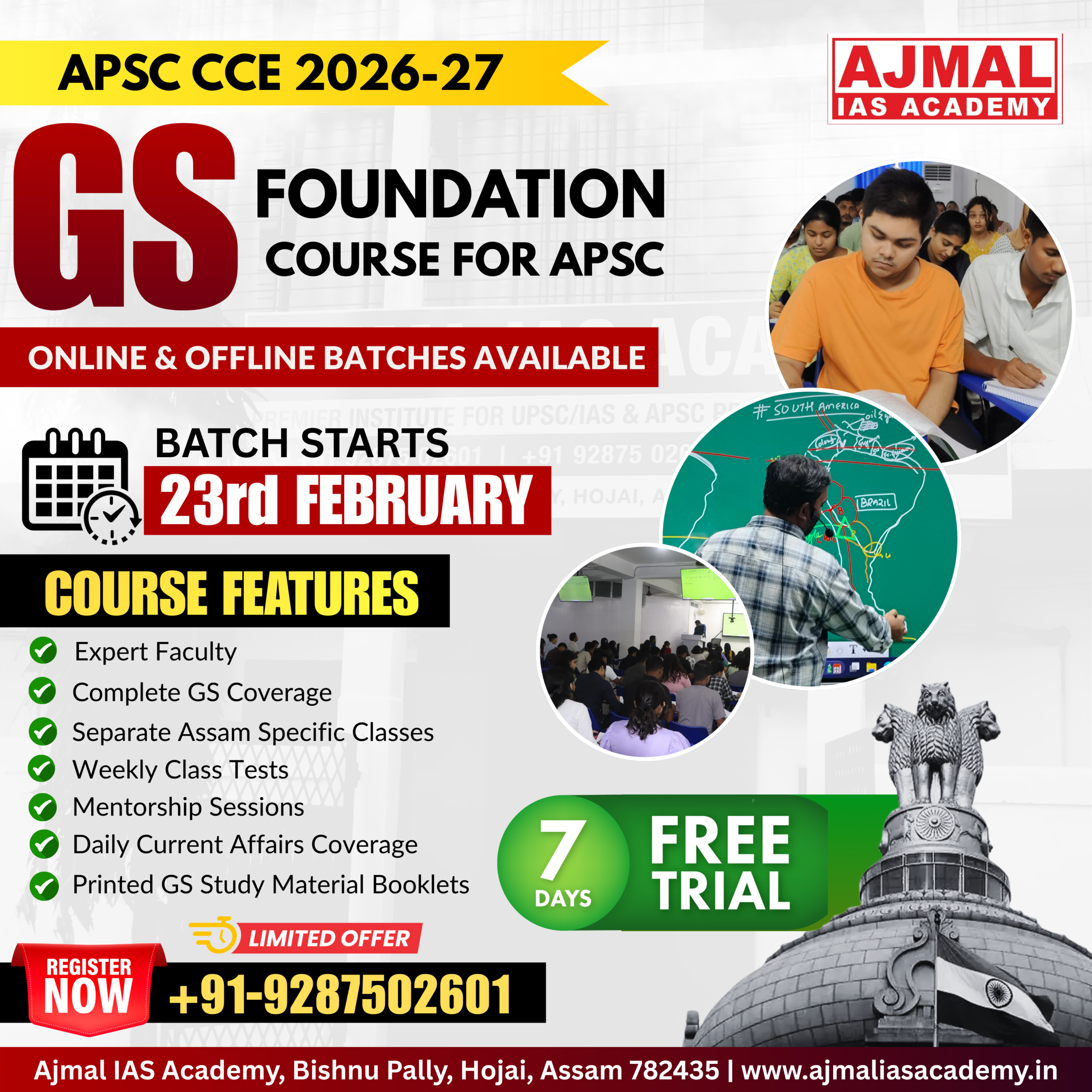

Start Yours at Ajmal IAS – with Mentorship StrategyDisciplineClarityResults that Drives Success

Your dream deserves this moment — begin it here.