Science and Technology, Artificial Intelligence, Ethics, Education, Economy (Innovation & R&D), and Current Affairs.

With the right training setup, a model can learn habits like “pause, check, and correct” largely on its own. This was done using rewards for both the final answer and the way the answer was reached, rather than by feeding it huge piles of step-by-step human solutions.

What is new here

Most language models learn by reading massive amounts of human text. They often become very fluent, but they may jump to an answer too quickly, copy human mistakes, and struggle on problems that need several steps of thinking. The DeepSeek-R1 project set out to change that by shaping the model’s habits.

- Reinforcement learning: training by trial and feedback. The model tries a path, gets a reward when it does something good, and learns to repeat that behaviour.

- Policy: the model’s strategy for choosing its next step. Rewards nudge this strategy toward useful habits.

- Process reward vs final reward: a final reward is given when the last answer is correct; a process reward is given when the way the model worked looks sensible—pausing to think, running a quick check, correcting itself.

- Reflection and verification: reflection means pausing to ask “does this make sense?”; verification means testing the result (for example, plugging a number back into an equation or running a small code snippet).

- Generalisation: using what was learned on new problems the model has not seen.
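To make "verification" concrete, here is a minimal Python sketch of the "plug the number back in" check described above. The equation 2x + 5 = 11 and the function names are illustrative assumptions, not part of DeepSeek-R1.

```python
from fractions import Fraction


def solve_linear(a: int, b: int, c: int) -> Fraction:
    """Solve a*x + b = c for x (a must be non-zero), using exact fractions."""
    return Fraction(c - b, a)


def verify_solution(a: int, b: int, c: int, x) -> bool:
    """Verification step: substitute x back into a*x + b and compare with c."""
    return a * x + b == c


x = solve_linear(2, 5, 11)            # candidate answer for 2x + 5 = 11
print(x, verify_solution(2, 5, 11, x))  # 3 True
print(verify_solution(2, 5, 11, 4))     # False: 2*4 + 5 is 13, not 11
```

The check is independent of how the answer was found, which is what makes it useful: a wrong guess fails it no matter how confident the reasoning sounded.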

What the team changed: instead of fine-tuning on many human-written solutions, they rewarded good reasoning behaviour during training. Over time, the model began to use phrases like “wait” or “let us check” on its own, shortened wasteful chains on easy questions, and wrote longer, more careful chains on hard ones.
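The idea that rewards alone can shift a policy toward careful habits can be illustrated with a toy example. The sketch below is a textbook REINFORCE update on a two-choice "policy" and is far simpler than anything used to train a large language model. The two styles ("guess" and "check") and their success rates are made-up assumptions.

```python
import math
import random

# Hypothetical reasoning styles the toy policy can choose between.
ACTIONS = ["guess", "check"]
# Assumed chance that each style produces a correct final answer.
P_CORRECT = {"guess": 0.5, "check": 0.9}


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0, 0.0]   # policy parameters: start indifferent between styles
    baseline = 0.0        # running average reward, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        a = 0 if rng.random() < probs[0] else 1  # trial: sample a style
        # Feedback: reward 1 only if the final answer turns out correct.
        reward = 1.0 if rng.random() < P_CORRECT[ACTIONS[a]] else 0.0
        advantage = reward - baseline
        # REINFORCE: d log softmax(a) / d logit[k] = 1[k == a] - probs[k]
        for k in range(len(logits)):
            logits[k] += lr * advantage * ((1.0 if k == a else 0.0) - probs[k])
        baseline += 0.05 * (reward - baseline)
    return dict(zip(ACTIONS, softmax(logits)))


print(train())  # the "check" probability typically ends up close to 1
```

No one tells the toy policy to check; checking simply pays more often, so its probability grows over many rounds.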

How the training actually worked

- Start with a strong base model. It could already read and write in natural language and code.

- Give it two channels: one for thinking steps (hidden from the user) and one for the final answer (what the user sees).

- Let it try, then score both outcome and process.

- If the final answer matched the truth, that earned reward.

- If the steps showed good habits—like checking a claim, using a simple test, or correcting a slip—that also earned reward.

- Discourage bad paths, encourage better ones. If a chain went in circles or produced contradictions, the reward fell. If a chain converged, the reward rose.

- Repeat at scale. Many training rounds taught the model to value careful behaviour because careful behaviour paid.
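The scoring steps above can be sketched as a small reward function. This is a toy illustration of the outcome-plus-process idea as this article describes it, not the reward actually used for DeepSeek-R1. The `<think>`/`<answer>` tags, the check phrases and the weights are all assumptions.

```python
import re

# Hypothetical markers for the two channels (thinking vs final answer).
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
# Hypothetical phrases treated as evidence of a check or self-correction.
CHECK_PHRASES = ("let us check", "verify", "wait")


def score(response: str, truth: str) -> float:
    think = THINK_RE.search(response)
    answer = ANSWER_RE.search(response)
    if think is None or answer is None:
        return -1.0  # malformed: thinking and answer are not kept separate
    # Outcome (final) reward: does the answer match the known truth?
    outcome = 1.0 if answer.group(1).strip() == truth else 0.0
    # Process reward: small bonus if any thinking step contains a check.
    steps = [s.strip().lower() for s in think.group(1).splitlines() if s.strip()]
    checked = any(p in s for s in steps for p in CHECK_PHRASES)
    process = 0.2 if checked else 0.0
    # Going in circles: penalise each repeated identical step.
    repeats = len(steps) - len(set(steps))
    return outcome + process - 0.1 * repeats


good = "<think>\n2x + 5 = 11 so x = 3\nlet us check: 2*3 + 5 = 11\n</think><answer>3</answer>"
circular = "<think>\nx = 4\nx = 4\n</think><answer>4</answer>"
print(score(good, "3"), score(circular, "3"))
```

Keyword matching like this is deliberately crude; it is exactly the kind of weak process signal a model could learn to game.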

What changed during training

- Chains became more focused. The model spent effort where it mattered and stopped over-talking when a short path was enough.

- The model began to self-correct without being told to do so.

- The writing style shifted from “confident first guess” to “work it out, check, then answer.”

How it was tested

- On tough school-level mathematics contest problems, accuracy rose from roughly the mid-teens (per cent correct) at the start of training to well above three-quarters during training, and later crossed the mid-eighties.

- On mixed reasoning suites (maths, programming, following instructions), the model also improved strongly, with the biggest gains on tasks that reward careful checking.

Why this matters

Less dependence on human labels. Earlier systems often needed large, curated sets of human solutions that show every step. That is expensive, slow, and can carry human bias. Reward-based training reduces this dependence by letting the model learn from what works rather than copy how a human wrote it.

Better habits, closer to how careful people work. The model learned to pause, test, and only then answer. That is what teachers ask students to do; it is also what we expect from any tool used in science, policy, law, finance, and public service.

More useful in real workflows.

- In education, a tutor that shows steps, checks a student’s move, and suggests the next hint is more helpful than one that just spits out an answer.

- In coding, a helper that runs a quick unit test before replying saves time.

- In policy or law, a drafter that lists reasons, evidence, and weak links makes review easier.

- In public service, a bot that explains each decision and logs its checks can improve trust.
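For the coding case, "run a quick unit test before replying" can be sketched as follows. The two drafts and the test cases are hypothetical; a real assistant would run generated code in an isolated sandbox rather than in-process.

```python
from typing import Callable, List, Optional, Tuple


def passes_tests(func: Callable[[int], int], tests: List[Tuple[int, int]]) -> bool:
    """Run each (input, expected) pair; a mismatch or a crash counts as failure."""
    for arg, expected in tests:
        try:
            if func(arg) != expected:
                return False
        except Exception:
            return False
    return True


def reply_with_checked_code(candidates, tests) -> Optional[str]:
    """Return the name of the first draft that passes, else None."""
    for name, func in candidates:
        if passes_tests(func, tests):
            return name
    return None  # nothing passed: better to say so than reply with broken code


# Two hypothetical drafts of a "square a number" helper.
drafts = [
    ("draft_1", lambda n: n * 2),  # buggy: doubles instead of squaring
    ("draft_2", lambda n: n * n),
]
tests = [(0, 0), (3, 9), (-4, 16)]
print(reply_with_checked_code(drafts, tests))  # draft_2
```

Note that `draft_1` passes the first test (0 maps to 0) and is caught only by the second, which is why even a "quick" check should use more than one case.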

Safety hooks. Training a model to verify can also teach it to refuse when facts are missing or a request could be unsafe. This is not a full safety solution, but it is a useful building block.

Strengths, limits, and trade-offs

Strengths

- Teaches careful habits with far fewer human-written solutions.

- Works across subjects: mathematics, coding, and multi-step questions.

- Adjusts effort: short chains for easy tasks, longer chains for hard ones.

- Produces steps that humans can read and audit.

Limits and risks

- Compute and energy: reinforcement learning with exploration can be heavy and costly. Training must be designed to waste less effort.

- Reward design: if rewards are weak, the model may “game” them—writing long, showy chains that look thoughtful but do not truly check anything.

- Truth still needs grounding: a careful chain can still be wrong if the underlying facts are wrong. Reliable sources and human review remain essential.

- Misuse risk: stronger planning also needs stronger safeguards, audit logs, and strict use rules so the model is not guided into harmful tasks.

- Human role changes, not vanishes: fewer people may be needed to label every step, but more will be needed to design rewards, test safety, and review outputs in sensitive settings.
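The reward-design risk can be shown in a few lines. In this hypothetical sketch, a weak reward that pays for length prefers a padded chain with a wrong answer, while a reward tied to an independent check prefers the short, correct chain. Both reward formulas are assumptions chosen for illustration.

```python
from typing import Callable, List


def naive_reward(chain: List[str]) -> float:
    """Weak reward: pays for length alone, so padding 'looks' thoughtful."""
    return 0.1 * len(chain)


def checked_reward(chain: List[str], answer: int, check: Callable[[int], bool]) -> float:
    """Sturdier reward: pays only if the answer passes an independent check,
    and charges a small cost per step so padding no longer helps."""
    return (1.0 if check(answer) else 0.0) - 0.01 * len(chain)


def is_solution(x: int) -> bool:
    return 2 * x + 5 == 11  # independent test for 2x + 5 = 11


padded = ["Let me think very carefully."] * 12  # long, showy, checks nothing
short = ["2x = 11 - 5 = 6", "x = 6 / 2 = 3"]

print(naive_reward(padded) > naive_reward(short))  # True: padding wins
print(checked_reward(short, 3, is_solution) > checked_reward(padded, 4, is_solution))  # True
```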

Bottom line: this method moves the needle from “memorise and speak” to “work and check,” but it must be paired with good data, good tools, and good guardrails.

What India can do with this (policy, industry, classrooms)

Education

- Build low-cost reasoning tutors in Indian languages that show steps, check student work, and offer the next hint.

- Use reward-trained models to generate practice sets that adapt to a student’s level and explain the solution path.
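A tutor that "checks a student's move and offers the next hint" can be sketched for simple linear equations. Representing a x + b = c as the tuple (a, b, c) and the hint wording are assumptions made for this example; a real tutor would need a far richer model of student work.

```python
from fractions import Fraction
from typing import Tuple

Equation = Tuple[int, int, int]  # (a, b, c) stands for a*x + b = c, with a != 0


def solution(eq: Equation) -> Fraction:
    a, b, c = eq
    return Fraction(c - b, a)


def check_move(before: Equation, after: Equation) -> bool:
    """A move is valid if it keeps the same solution (both sides changed equally)."""
    return solution(before) == solution(after)


def next_hint(eq: Equation) -> str:
    a, b, c = eq
    if b > 0:
        return f"Subtract {b} from both sides."
    if b < 0:
        return f"Add {-b} to both sides."
    if a != 1:
        return f"Divide both sides by {a}."
    return "Done: x is isolated."


start = (2, 5, 11)                     # 2x + 5 = 11
print(next_hint(start))                # Subtract 5 from both sides.
print(check_move(start, (2, 0, 6)))    # True: 2x = 6 keeps x = 3
print(check_move(start, (2, 0, 16)))   # False: 5 was added, not subtracted
```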

Public services

- Add “explain and verify” helpers to government portals. Each decision can show the rule applied, the data used, and a quick consistency check, with a clear escalation to a human when facts are missing.
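An "explain and verify" helper of this kind can be sketched as a function that returns the rule, the data used and the checks alongside the outcome. The eligibility rule and field names below are entirely hypothetical and do not describe any real scheme.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Decision:
    outcome: str               # "eligible", "not eligible" or "escalate to human"
    rule: str                  # the rule that was applied
    data_used: Dict[str, int]  # the facts the decision relied on
    checks: List[str] = field(default_factory=list)  # consistency checks run


# Hypothetical rule, for illustration only.
RULE = "Eligible if age >= 60 and annual_income <= 100000"
REQUIRED = ("age", "annual_income")


def decide(application: Dict[str, Optional[int]]) -> Decision:
    missing = [k for k in REQUIRED if application.get(k) is None]
    if missing:
        # Facts are missing: do not guess, hand over to a person.
        return Decision("escalate to human", RULE, {}, [f"missing: {', '.join(missing)}"])
    data = {k: application[k] for k in REQUIRED}
    if not (0 <= data["age"] <= 120) or data["annual_income"] < 0:
        return Decision("escalate to human", RULE, data, ["inconsistent values"])
    checks = ["values within plausible ranges"]
    ok = data["age"] >= 60 and data["annual_income"] <= 100000
    return Decision("eligible" if ok else "not eligible", RULE, data, checks)


print(decide({"age": 65, "annual_income": 80000}))
print(decide({"age": 65}).outcome)  # escalate to human
```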

Research and startups

- Focus on better training methods rather than chasing the largest dataset. Smart reward design can narrow the gap with far fewer labels.

- Allow tool use during the model's thinking phase (calculators, code sandboxes, and small databases) to raise accuracy further.
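Tool use during thinking can be sketched as a runtime that spots tool calls in the model's draft reasoning and replaces them with exact results. The `CALC[...]` syntax is a hypothetical convention; the calculator accepts only numbers, + - * / and unary minus, and rejects everything else.

```python
import ast
import operator
import re

# Only these arithmetic operations are allowed.
OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
       ast.Div: operator.truediv, ast.USub: operator.neg}


def calc(expr: str):
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in OPS:
            return OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expr, mode="eval"))


# Hypothetical convention: the model writes CALC[...] while thinking and the
# runtime substitutes the calculator's result before reasoning continues.
CALL_RE = re.compile(r"CALC\[([^\]]+)\]")


def run_tools(thinking: str) -> str:
    return CALL_RE.sub(lambda m: str(calc(m.group(1))), thinking)


print(run_tools("Total cost is CALC[37 * 24] rupees; per head CALC[37 * 24 / 8]."))
# Total cost is 888 rupees; per head 111.0.
```

Parsing with `ast` instead of calling `eval` keeps the tool from running arbitrary code the model might write.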

Standards and safety

- Define tests for reasoning quality (did the model check?) and responsible behaviour (did it refuse unsafe steps?).

- Support efficient data centres and green power, since this training style uses more compute.
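Tests for "did the model check?" and "did it refuse unsafe steps?" could be summarised from reviewed logs roughly as below. The record fields and metric names are assumptions for illustration; a real standard would define them precisely and label the data carefully.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Record:
    correct: bool         # final answer matched the reference
    checked: bool         # thinking contained an explicit verification step
    unsafe_request: bool  # reviewers labelled the prompt as unsafe
    refused: bool         # the model declined to help


def rate(flags: List[bool]) -> float:
    return sum(flags) / len(flags) if flags else 0.0


def report(records: List[Record]) -> Dict[str, float]:
    safe = [r for r in records if not r.unsafe_request]
    unsafe = [r for r in records if r.unsafe_request]
    return {
        "accuracy": rate([r.correct for r in safe]),
        "check_rate": rate([r.checked for r in safe]),
        "refusal_rate_on_unsafe": rate([r.refused for r in unsafe]),
        "over_refusal_rate": rate([r.refused for r in safe]),  # refusing safe work
    }


logs = [
    Record(correct=True, checked=True, unsafe_request=False, refused=False),
    Record(correct=False, checked=False, unsafe_request=False, refused=False),
    Record(correct=False, checked=False, unsafe_request=True, refused=True),
    Record(correct=False, checked=False, unsafe_request=True, refused=False),
]
print(report(logs))
```

Tracking over-refusal alongside refusal matters: a model that refuses everything would score perfectly on safety and be useless in practice.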

Exam hook

Teach habits, not just answers. DeepSeek-R1 shows that reward-based training can build reflection, verification, and adaptability without heavy reliance on human-written solutions. The shift is from “feed more examples” to “shape better behaviour,” which matters for education, coding, public services, and safe use of artificial intelligence.

Key takeaways

- Reinforcement learning can teach a model to pause, test, and correct—not just talk.

- Giving process rewards alongside final rewards changes behaviour in a useful way.

- Gains are strongest on tasks that truly need several steps of reasoning.

- The approach reduces the need for hand-written step-by-step data, but it needs careful reward design, strong safety checks, and efficient compute.

- India can apply this in schools, government portals, and startups, backed by clear standards and energy-smart infrastructure.

UPSC Mains question

Question:

“Reinforcement learning can shift artificial intelligence from pattern recall to active reasoning.” Explain with reference to DeepSeek-R1. In your answer:

(a) define outcome and process rewards;

(b) show how reflection and verification improved results in maths and coding;

(c) discuss risks around compute cost, reward design, and safe public deployment;

(d) suggest one policy move for Indian classrooms and one for government portals.

Hints for structuring the answer: definition → method steps → examples of habits learned → benefits → limits and safeguards → two India-specific actions → close.

One-line wrap

When you reward a model for how it thinks as well as what it answers, it starts to reason—pause, check, and correct—much like a careful student.

Share This Story, Choose Your Platform!

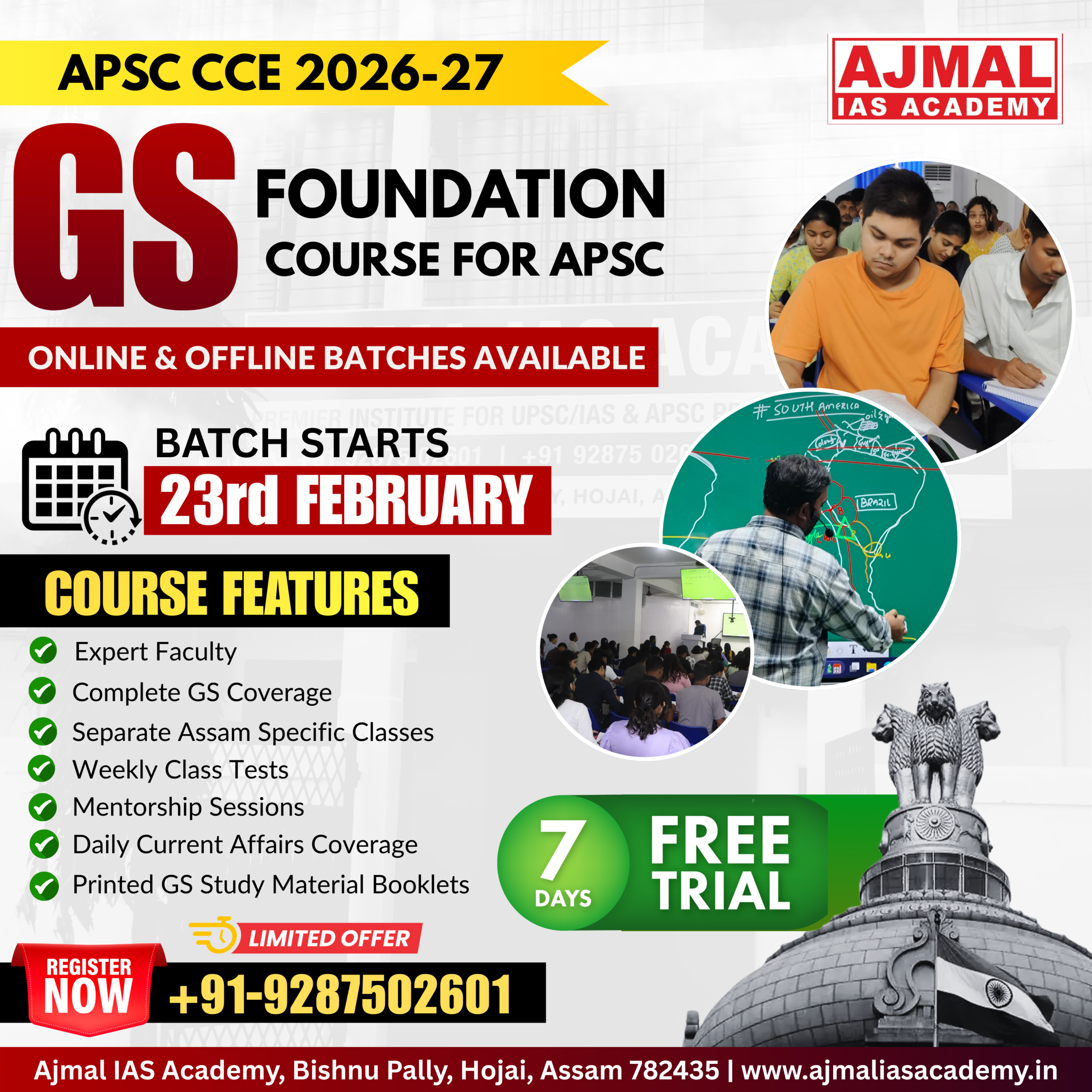

Start Yours at Ajmal IAS, with Mentorship, Strategy, Discipline and Clarity: Results that Drive Success

Your dream deserves this moment — begin it here.