Economy, Ethics, Science and Technology, Labour Laws, Social Justice, and Current Affairs.

What is going on

On our screens, artificial intelligence looks fast, clean and almost magical. Behind the screen, it runs on a large and mostly invisible human workforce. These workers label images, read and tag text, transcribe audio, moderate violent or sexual content, rank machine-generated answers, and test systems for safety.

- Many live in developing countries and work through online platforms. Reports from Africa and Asia show pay of less than two United States dollars per hour, strict time targets measured in seconds, unstable contracts, and serious mental stress after seeing disturbing material for many hours a day.

- They are sometimes called “ghost workers” because users never see them, yet the systems depend on them.

- This hidden labour helped train, and now helps maintain, the chat tools that answer questions, the image tools that draw pictures, the voice tools that speak, and the filters that try to keep harmful content away. Without this human effort, most of today’s artificial intelligence would not work reliably in daily life.

What is artificial intelligence?

Simple meaning: Artificial intelligence is a way of making machines learn from examples so that they can recognise patterns and make useful predictions or create new content.

Main families you should know

- Narrow artificial intelligence: Systems trained to do one type of task very well. Examples: face unlock on a phone, language translation, route suggestion in traffic, medical scan reading. This is the dominant form today.

- General artificial intelligence: A still-emerging idea where a single system can learn any mental task that a human can learn, shift across domains, and reason with common sense. We are not there yet, but some modern systems are moving closer in limited ways.

- Super artificial intelligence: A future possibility where machine intelligence is far beyond human ability across most tasks. This raises deep safety, labour, and governance questions, because such a system could change economies very quickly.

Main forms used today

- Recognition systems: find faces in pictures, read number plates, detect spam messages, sort medical scans.

- Prediction and ranking systems: show which product or video you may like, suggest routes in traffic, flag possible fraud in finance.

- Generative systems: write text, create images, produce audio and video, and write computer code after learning from very large amounts of public and private data.

- Robotic systems: use sensors and software to carry out physical tasks such as moving goods in a warehouse or guiding a vehicle.

What can it do well?

- Repeatable pattern work at scale: sorting, matching, summarising, translation across many languages, first-level customer support.

- Fast search through huge data sets: quick answers and suggestions.

- Assist professionals: draft a note, outline a judgment summary, propose a design, suggest code, help read a scan.

How it is changing work

- Where it replaces human effort: first-draft writing, routine data entry, basic editing, transcription, simple customer chat, first-level technical support.

- Where it assists humans: doctors reading scans, teachers preparing lessons, lawyers and judges summarising past cases, police sorting evidence, officials drafting notes, designers and writers brainstorming ideas.

- Where it should not replace humans (for now): final decisions that affect life, liberty, money or public order. Decisions such as granting or denying bail, selecting welfare beneficiaries, approving medical treatment, or taking policing action should keep a human in charge with full responsibility.

Where the unseen labour fits in

A machine does not understand the world by itself. People teach it.

- Data labelling: Workers mark what is inside pictures (for example, pedestrian, traffic sign, pothole), tag the meaning of sentences, and identify harmful speech. The quality and fairness of the output depend on how well and how honestly this labelling is done.

- Human feedback on answers: After a system generates answers, workers rank and correct them. This feedback is used to fine-tune the system’s behaviour and to discourage easy shortcuts in its answers.

- Content safety and cleaning: Moderators remove graphic, hateful or sexual content, write rules for filters, and test them. Many do this for six to eight hours a day. Long exposure is linked with anxiety, depression and post-traumatic stress.

- Voice and gesture recording: Actors record thousands of lines and movements so that systems can speak naturally and recognise actions.

- Evaluation and red-team testing: People try to break the system to expose bias, cheating, privacy leaks or dangerous instructions, so that developers can fix them.

Common risk patterns across this chain

- Pay that can be below local living standards; sudden termination without notice.

- Tight quotas (often measured in seconds per task); constant screen surveillance.

- Non-experts asked to label sensitive medical or legal data.

- Children or students pulled into gig tasks through family accounts.

- Weak complaint channels; union efforts sometimes discouraged.

- Workers spread across many tiny contractors, which hides responsibility.

Debates around artificial intelligence

Benefits (why societies are adopting it)

- Speed and scale: translation for classrooms across many languages; instant summaries for citizens; faster help for farmers and small shops.

- Lower cost of access: legal information, health guidance and government help can reach remote areas.

- New jobs and tools: safety testing, product design, local-language content creation, small business automation.

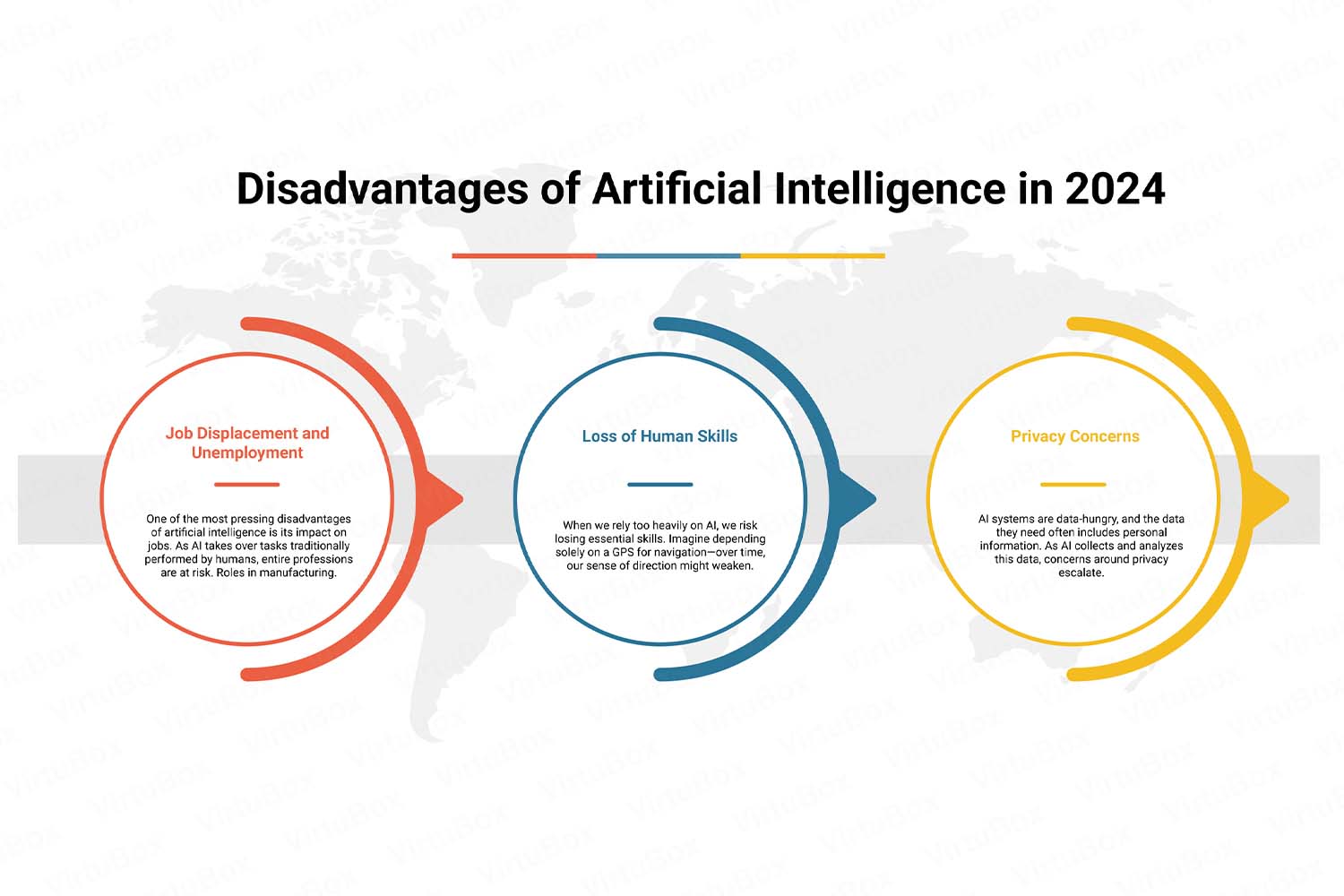

Harms and worries (why caution is needed)

- Labour exploitation: hidden workers carry the burden while big firms capture most of the value.

- Bias and unfair outcomes: if training examples carry social bias, outputs can hurt weaker groups.

- Opacity: people do not know which data were used, who labelled them, or how a decision was made.

- Consent and copyright: creators and citizens may not have agreed to let their work or personal data train a system.

- Environmental cost: training and running large systems use significant electricity and water; data centres can strain local rivers and power grids.

- Job displacement and deskilling: routine roles shrink; mid-skill roles change quickly; without training many workers can be left behind.

What should be done now

For companies

- Name the supply chain: publish where data came from, who labelled it, and what tests were run for bias and safety.

- Pay a living wage: link pay to local cost of living; pay on time; no forced overtime.

- Protect mental health: rotate workers away from harmful material; provide paid counselling and recovery time.

- Ban child labour and forced work: use independent audits and surprise checks; publish results.

- Safety by design: test for bias and misuse before launch; keep a plain-language help and complaints channel open for users and workers.

For governments

- Fair work standards for online labour: minimum pay, written contracts, social security, and the right to organise for all digital workers.

- Data protection and consent: clear rights to see, correct or delete personal data; strong penalties for misuse; fast notice to victims when data are leaked.

- Registers of high-risk systems: if a tool affects rights and services, make test results and audits public.

- Public capacity: shared testing labs, open tools, and large-scale training for schools, colleges and local governments.

- Green rules: disclose electricity and water use; reward efficient models and efficient data centres.

For universities and civil society

- Independent audits of labour conditions and bias.

- Ethics and data rights in all technical courses.

- Helplines and legal aid for exploited digital workers.

For individuals

- Be mindful of what you upload; it can become training data.

- Ask for a human review when a machine-made outcome affects your rights or benefits.

- Prefer services that publish fair work and safety policies.

Looking ahead: if general or super-level systems appear

- Human control: keep a human in final charge wherever decisions affect life, liberty, money or public order. Machines can suggest; humans must decide and explain.

- Layered safety: build tools that explain their steps; add independent watch systems that monitor dangerous behaviour; create strong “stop switches”.

- Plan for failure: run drills; practise shutdown and recovery; make it a legal duty to pause deployment when serious harm is likely.

- Compute and model governance: require licences for very large training runs; record who trained what, with which data, and for what purpose.

- Global standards: agree on shared labour rules, shared safety tests, and shared reporting so that firms cannot shift harm to weaker regions.

- Mass education: teach critical thinking, data rights and prompt-based working to every student and worker; support workers to move into higher-skill roles.

Artificial intelligence is human effort multiplied by machines. The clean screen hides a chain of workers who make it possible. If we want the benefits (better access, lower costs, new tools), we must also clean up the labour chain, protect privacy and consent, reduce bias and environmental harm, and keep human judgment in command where it matters most. That is how societies can be both modern and just.

Exam hook

“Artificial intelligence looks automatic, but it runs on invisible human labour. A fair path must join living wages, mental-health care, clear consent, and human-in-charge rules, while keeping the door open for useful innovation.”

Key takeaways

- Today’s systems depend on millions of human tasks: labelling, moderation, feedback and testing.

- Documented cases show pay below two United States dollars per hour, strict quotas measured in seconds, and mental-health injuries.

- Benefits are real (speed, access, new tools), but harms are also real (exploitation, bias, opacity, consent conflicts, environmental cost, job change).

- Practical fixes exist now: fair work rules, data rights and audits, safety testing before launch, green reporting, and a human in final control for high-risk uses.

- For the future, prepare for stronger systems with licences for very large training runs, global standards, and mass education.

Mains question

Question: “Unseen labour is the engine of modern artificial intelligence.” Critically examine. Suggest a policy package that protects workers and citizens and keeps innovation alive.

Hints to use: define the hidden supply chain; give two tasks (labelling, moderation) with a number (for example, pay below two dollars per hour or thousands of items per day); balance benefits and harms; list remedies (living wage, mental-health care, audits, data rights, green rules, human-in-charge); end with global standards and education.

One-line wrap

Make the machine smart, but keep the people safe, fairly paid, and in charge where it truly matters.


Start Yours at Ajmal IAS, with Mentorship, Strategy, Discipline, Clarity and Results that Drive Success
